Every IT company faces this challenge: how do you install the latest version of your software onto the servers that run it?

Before we answer this simple question, we need to understand the environment around these servers.

In a common scenario, an IT company sets up the servers that run its online business inside the company network.

Here is an example setup:

Figure: A typical online business network

- The company network is divided by switches and routers. Traffic crossing (inbound and outbound) the networks and subnetworks is checked by firewalls and IDS/IPS devices.

- For high availability, your company may have more than one site, geographically distributed. When one site is down for maintenance, upgrades, disaster recovery, etc., the other sites keep the online business up and running. A DNS server distributes the internet traffic across the sites according to a DNS-level load-balancing policy. The sites have almost symmetrical network setups, and they need techniques such as database ETL, or reading from every site while writing to only one, to stay synchronized. Ideally, from the outside, they should look like one site. So let's ignore the differences between sites and focus on just one.

- A site is divided into multiple subnets by routers. One of the subnets faces the internet, and at least one server in that subnet needs a public IP so that packets from the internet can be routed to it. This subnet is usually a DMZ.

- A fleet of application servers is installed in the DMZ; each server gets an internal IP.

- A load balancer is set up in front of this fleet of application servers, and one or more public IPs are assigned to it.

- Once the DNS servers resolve the domain name to public IP addresses, internet traffic flows into the load balancers at the sites, and the load balancers distribute the HTTP/HTTPS requests to the application servers.

- One load balancer with one public IP per site is the simplest scenario. Load balancing can happen at the DNS level across sites, or at the server level across VIPs; check out this post.

- Most likely, these front-end application servers need services from back-end application servers to handle heavy computations, such as validating sensitive information, provisioning a new device, authorizing a new user, or escalating a ticket. The front-end and back-end applications commonly communicate over upper-layer protocols such as XML-RPC, SOAP, or REST, or through a message queue (IBM MQ, RabbitMQ, Kafka, etc.).

- Unlike the front-end application servers in the DMZ, the back-end application servers need higher security, so they are put into separate subnets with stricter security policies (stricter firewall rules, longer log retention, stricter host access policies, etc.). A router is needed to route traffic between the DMZ and these subnets. Sometimes a mid-tier load balancer is used to balance the load across the back-end application servers.

- Finally, the back-end and front-end application servers need database access. The database servers are usually placed in the most secure company networks and hardened with live-live backups. Accessing one of them (the tertiary one, for example) requires a set of firewall rules and routing rules approved by the network administrator. Again, a router is needed to route the traffic across subnet boundaries. However, we don't need multiple routers if the servers in these subnets are close to each other: one router with multiple network interfaces will do, with each interface dedicated to one subnet and configured with its own set of routing rules.

- Last but not least, traffic crossing (inbound and outbound) the networks and subnetworks is guarded by firewalls, antivirus, IDS/IPS and WAF. They are transparent most of the time, but if you want to add new hosts, open new ports, add new routes, or access new internet resources, they reveal themselves and block your change unless you have a ticket for the network administrators.
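The two load-balancing levels in the list above (DNS across sites, then each site's load balancer across its application servers) can be modeled in a few lines of Python. This is a toy sketch under stated assumptions, not how any particular DNS server or load balancer is implemented: the site names and internal IPs are hypothetical, the DNS policy is modeled as smooth weighted round robin (the variant nginx uses for weighted upstreams), and real DNS answers are cached by resolvers, so real traffic splits are only approximate.

```python
from collections import Counter
from itertools import cycle


class WeightedDns:
    """Toy DNS policy: smooth weighted round robin over sites."""

    def __init__(self, weights):
        self.weights = dict(weights)
        self.current = {site: 0 for site in self.weights}
        self.total = sum(self.weights.values())

    def resolve(self):
        # Each site earns credit in proportion to its weight...
        for site, weight in self.weights.items():
            self.current[site] += weight
        # ...and the site with the most credit wins and pays back the total.
        chosen = max(self.current, key=self.current.get)
        self.current[chosen] -= self.total
        return chosen


class SiteLoadBalancer:
    """Toy per-site load balancer: plain round robin over internal IPs."""

    def __init__(self, internal_ips):
        self._servers = cycle(internal_ips)

    def route(self):
        return next(self._servers)


def simulate(dns_weights, sites, requests):
    """Count how many of `requests` land on each application server."""
    dns = WeightedDns(dns_weights)
    balancers = {site: SiteLoadBalancer(ips) for site, ips in sites.items()}
    return Counter(balancers[dns.resolve()].route() for _ in range(requests))


# Hypothetical sites and internal IPs.
SITES = {
    "site-a": ["10.1.0.11", "10.1.0.12"],
    "site-b": ["10.2.0.11", "10.2.0.12"],
    "site-c": ["10.3.0.11", "10.3.0.12"],
}

# Normal operation: three symmetric sites with equal weight.
print(simulate({"site-a": 1, "site-b": 1, "site-c": 1}, SITES, 600))
# site-c down for maintenance: its DNS weight drops to 0.
print(simulate({"site-a": 1, "site-b": 1, "site-c": 0}, SITES, 600))
```

Setting a site's weight to 0 models taking it out of the DNS pool for maintenance: the remaining sites absorb its share of the traffic, and each site's load balancer keeps spreading its share evenly over its own fleet.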

Now think again about the simple question: how do you install the latest version of your software onto the servers that run it?

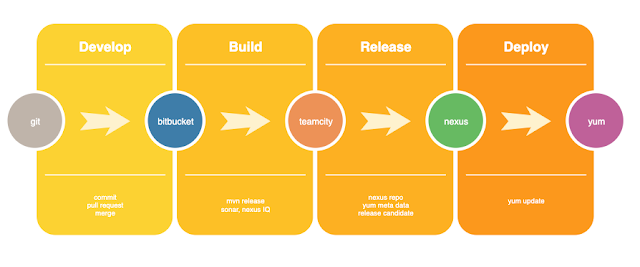

The network is only half of the story; the other half is the software development life cycle (SDLC). An example release cycle looks like the following:

Figure: A typical CI/CD pipeline

- The marketing team has a new idea: they predict that highlighting product features in color will boost sales.

- A proposal is created and prioritized, then a project manager starts the feature development with a group of programmers.

- The programmers finish coding, and after peer review the code is merged into the git development branch. The merge triggers a software release process in TeamCity, which builds an RPM from the development branch according to the instructions in the pom.xml file. The end effect: a new RPM is uploaded into the Maven repository, and Nexus Repository Manager generates the metadata yum needs, exposing the RPM packages hosted in the Maven repository in yum repository format. The fast-changing QA lab is quite flexible. Let's say yum update has a setup issue: even downloading and copying the RPM by hand with `curl -O http://yournexusrepo/el5/dev/1.5.69/yourappname.1.5.69_dev.rpm; scp *.rpm pp_appserver_q01:/tmp`, then ssh-ing into the server and running `sudo rpm -Uvh /tmp/*.rpm`, is an acceptable way of deploying the RPM to the QA server.

- QA finds bugs, a few bug fixes are merged into the development branch, new RPMs are installed on the QA server, and finally the QA tests pass.

- The tested development branch is then merged into the master release branch. This merge triggers the same release process in TeamCity, this time building the RPM from the master branch according to the instructions in the pom.xml file. Again, the new RPM is uploaded into the Maven repository, and Nexus Repository Manager exposes it in yum repository format.

- The RPM generated from your master release is now located at http://yournexusrepo/el5/pilot/1.5.69/yourappname.1.5.69.rpm, and it is time for the pilot test.

- The pilot is a special QA lab that receives a small share of production traffic for testing. The pilot application servers are often placed in a network symmetric to the production servers' network, but at a smaller scale. They could also share the network of one of the sites. For example, imagine your DNS server load balances the internet traffic across 3 symmetric sites, each with a load balancer in front of a fleet of application servers. Now imagine you shrink one site: give each server fewer cores and less memory and disk, take away 90% of the servers from the fleet, and send less traffic to the site. This stripped-down site becomes the pilot test lab. If your lab lives in one of the sites, you just split the existing load balancer in the DMZ into 2 load balancers. The split is uneven: one load balancer gets 90% of the application servers, the other gets 10%. The new load balancer gets a new public IP, and the DNS server can be configured to send 10% of the traffic there. The same split can also happen in the back-end application server fleet, assigning 10% of the servers and traffic to the pilot lab.

- Unlike in the QA environment, copying an RPM to a pilot application server with scp is forbidden by the network administrator: the scp command is not on the whitelist, sorry. If you need to upload or download something, you have to go through company-developed software, with an approved ticket for that action.

- You may feel that installing your latest RPM is harder in pilot than in QA, but in fact the pilot servers get your latest RPM from the repo automatically; you need to do nothing. The yum program on each server is configured to check the yum repo for new versions and update. Guess which yum repo it points to? http://yournexusrepo/el5/pilot/, which is exposed by Nexus Repository Manager.

- The pilot server now installs the latest RPM automatically, and the RPM installation does a lot of magic: it unpacks the RPM, copies the jar files and other files to the right locations, runs scripts to open or close ports on the server, runs scripts that assume admin rights to grant permissions to the Linux account that runs the software, and sends a stop signal to the running processes so that they stop and get restarted by the monitoring process, to name just a few.

- The pilot takes production traffic for a few days, and everything looks good. It is time for the production release.

- To release a production version, the release management team needs to review the test results and the release ticket. Upon approval, release management clicks a button in TeamCity to trigger a production release. The end effect is that the RPM built from your master release is published at http://yournexusrepo/el5/prod/1.5.69/yourappname.1.5.69.rpm.

- You may wonder what's next for you. The answer: nothing.

- Like the pilot servers, the production application servers have yum auto-update set up, so they install the RPMs automatically, and the deployment is done.

- There are some considerations around update timing. You don't want all the production servers to run yum update at the same time, which would bring the site down while the software restarts. You want a rolling deploy, meaning the servers update in batches. If your software's sessions are incompatible across software versions, a rolling deploy will not work for you, because a session created by the old version could be handled by the new version during the update. In that case, you may need to take a site out of the DNS IP pool, wait for all existing connections to drain, then install the new version on that site. Put the updated site back into the DNS IP pool, then update the other sites the same way.
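The pilot split and the rolling deploy described in the list above boil down to simple bookkeeping over lists of servers. The sketch below is a hypothetical planner, not part of TeamCity, yum or Nexus: `split_fleet` carves roughly 10% of a site's servers out for the pilot, and `plan_release` produces either batched update steps or, when sessions are incompatible across versions, the drain-the-whole-site sequence. The server names, the step tuple format and the batch size are assumptions for illustration.

```python
def split_fleet(servers, pilot_percent=10):
    """Split a site's fleet into (production, pilot); the pilot gets at least one server."""
    n_pilot = max(1, len(servers) * pilot_percent // 100)
    return servers[n_pilot:], servers[:n_pilot]


def rolling_batches(servers, batch_size):
    """Group servers into batches that update one batch at a time."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return [servers[i:i + batch_size] for i in range(0, len(servers), batch_size)]


def plan_release(sites, batch_size, sessions_compatible):
    """Return ordered (action, site, servers) steps for a production release."""
    steps = []
    for site, servers in sites.items():
        if sessions_compatible:
            # Rolling deploy: one batch restarts at a time while the rest keep serving.
            for batch in rolling_batches(servers, batch_size):
                steps.append(("update", site, batch))
        else:
            # Sessions must never cross versions: drain the whole site first.
            steps.append(("remove_from_dns", site, []))
            steps.append(("wait_for_drain", site, []))
            steps.append(("update", site, list(servers)))
            steps.append(("add_to_dns", site, []))
    return steps


# Hypothetical fleet of 20 application servers in one site.
fleet = [f"app{i:02d}" for i in range(1, 21)]
production, pilot = split_fleet(fleet)
print(len(production), len(pilot))  # 18 2

for step in plan_release({"site-a": production}, batch_size=6, sessions_compatible=True):
    print(step)
```

Integer arithmetic is used for the pilot percentage on purpose: multiplying by a float such as 0.1 can land just above a whole number and round the pilot size up by one.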

Now, let's migrate our application servers into the AWS cloud and rethink the simple question: how do you install the latest version of your software onto the servers that run it?

Again, before we answer this simple question, we need to understand the environment around these servers.

In a common scenario, an IT company maps its existing company network to an AWS virtual private cloud (VPC).

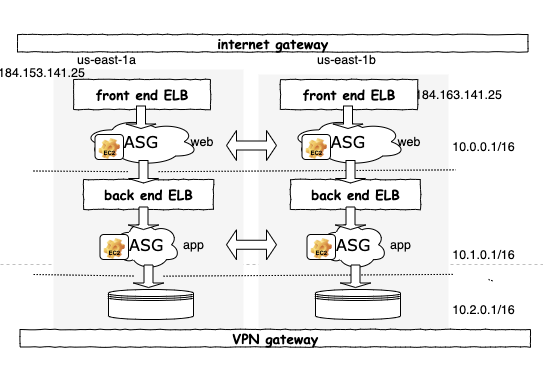

Here is an example mapping:

Figure: A typical AWS business VPC

- The VPC network is divided into subnets. There is no physical router; the AWS VPC provides an invisible software router that routes traffic across the subnets. Each subnet is associated with a route table, which plays the role of the routing rules on one interface of a physical router. The AWS administrator (assume the network administrator got re-hired) attaches an internet gateway to your VPC for internet access, then attaches a virtual private gateway for a Site-to-Site VPN tunnel back to your company network. The administrator also makes sure that your network ACL and security group rules allow the relevant traffic to flow to and from your instances. There is no physical firewall either; its function is mapped to network ACLs and security group rules.

- For high availability, your company needs redundancy in the network. The multiple sites map to multiple AWS availability zones in a particular region. Let's assume your company's users are mainly on the east coast of the United States, and you want to give them the best service. So you put your VPC in region us-east-1. West coast users will be served a little more slowly, since the AWS resources are geographically farther away from them, and users in Asia more slowly still. You can boost the speed by duplicating the VPC in other regions, such as us-west-2, but traffic across regions costs money. That should make sense: if your corporation had 2 physical branches on the east and west coasts, you would expect higher operating costs. Let's focus on one region, the us-east-1 branch. Each region has multiple isolated locations known as availability zones. Instead of 3 sites, you now have 3 availability zones: you set up the subnets in one availability zone, then duplicate the setup in the other 2. AWS can distribute the traffic across the availability zones automatically. There is good and bad in this automation. The good part: availability zones in a region are connected through low-latency links, almost as if they were in the same room, and AWS takes over much of the job of keeping the sites synchronized (managed services such as multi-AZ databases replicate across zones for you). That largely ends the cross-site data synchronization problem. The bad part: previously, traffic entering a site was handled by servers within that same site; now you may lose that "feature". An HTTP request can be received by an application server in us-east-1a, passed to a back-end application server in us-east-1b, then passed to a database server in us-east-1c. Now let's focus on the network in one availability zone.

- The network in an availability zone is divided into multiple subnets. The AWS administrator can do that with a few clicks and keystrokes; you won't see any routers or cables. One of the subnets faces the internet, and at least one server in that subnet needs a public IP so that packets from the internet can be routed to it. This subnet is special in AWS too: a subnet you create is private by default. To make it public, add a route for 0.0.0.0/0 that points to the internet gateway in the subnet's route table, and make sure its instances get public (or Elastic) IP addresses.

- A fleet of EC2 instances is added to the public subnet; each instance gets a private IP.

- An Elastic Load Balancer (ELB) is set up in front of this fleet of application servers. An ELB is usually reached through a DNS name rather than one fixed IP, although a Network Load Balancer can be given one Elastic IP per availability zone. "Elastic" here means the address belongs to your account: if the load balancer is recreated, the same IP can be assigned to the new one. The fleet is grouped into an Auto Scaling group (ASG), so that policies can replace an EC2 instance that becomes unavailable or add instances when more traffic needs to be handled.

- Once the DNS servers resolve the domain name to the load balancer's public IP addresses, internet traffic flows into the load balancer nodes in the availability zones, and the ELB distributes the HTTP/HTTPS requests to the application servers.

- AWS handles the load balancing itself and scales the load balancer's capacity up and down for you.

- Most likely, these front-end application servers need services from back-end application servers to handle heavy computations, such as validating sensitive information, provisioning a new device, authorizing a new user, or escalating a ticket. The front-end and back-end applications commonly communicate over upper-layer protocols such as XML-RPC, SOAP, or REST, or through a message queue (IBM MQ, RabbitMQ, etc.).

- Unlike the front-end application servers in the public subnet, the back-end application servers need higher security, so they are put into separate subnets with stricter security policies (stricter firewall rules, longer log retention, stricter host access policies, etc.). Every route table in the VPC already contains a local route covering the whole VPC CIDR, so traffic between the public subnet and these subnets is routed without extra routes; what separates them are the network ACL and security group rules.

- Finally, the back-end and front-end application servers need database access. The database servers are usually put in the most secure subnets and hardened with live-live backups. Accessing one of them (the tertiary one, for example) requires a set of network ACL and security group rules approved by the AWS administrator. Again, the route tables must carry the traffic across subnet boundaries.

- Last but not least, traffic crossing (inbound and outbound) the VPC, the internet, the VPN and the subnets is guarded by firewalls, antivirus, IDS/IPS, WAF, network ACLs, security group rules and IAM. They are transparent most of the time, but if you want to add new EC2 instances, open new ports, add new routes, or access new internet resources, they reveal themselves and block your change unless you have a ticket for the AWS administrators.

- Let's assume your GitHub, TeamCity, and yum repo are still located inside your company network, outside AWS. Then you need a Site-to-Site VPN between the VPC and your company network, so that packets can be routed between the yum repo and the prod servers. Firewall rules need to be set up on the company network side, and network ACL and security group rules on the VPC side.
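How a VPC route table decides where a packet goes can be sketched with Python's `ipaddress` module. A route table picks the most specific matching route (longest prefix match); every VPC route table contains a `local` route for the VPC's CIDR, and a subnet is public when its route table sends `0.0.0.0/0` to an internet gateway. The CIDRs and the `igw-0example`/`vgw-0example` IDs below are hypothetical, chosen only to mirror the setup in the list above.

```python
import ipaddress


def lookup(route_table, destination):
    """Pick the route target for destination using longest-prefix match."""
    dest = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(cidr), target)
        for cidr, target in route_table.items()
        if dest in ipaddress.ip_network(cidr)
    ]
    if not matches:
        return None  # no matching route: the packet is dropped
    _, target = max(matches, key=lambda match: match[0].prefixlen)
    return target


def is_public(route_table):
    """A subnet counts as public when 0.0.0.0/0 points at an internet gateway."""
    return route_table.get("0.0.0.0/0", "").startswith("igw-")


# Hypothetical: VPC 10.0.0.0/16, company network 172.16.0.0/12 behind the VPN.
PUBLIC_SUBNET_ROUTES = {
    "10.0.0.0/16": "local",          # present in every route table of the VPC
    "0.0.0.0/0": "igw-0example",      # internet gateway: makes the subnet public
    "172.16.0.0/12": "vgw-0example",  # virtual private gateway: VPN back home
}
BACKEND_SUBNET_ROUTES = {
    "10.0.0.0/16": "local",
    "172.16.0.0/12": "vgw-0example",
}

print(lookup(PUBLIC_SUBNET_ROUTES, "10.0.2.15"))     # local (back-end subnet, same VPC)
print(lookup(PUBLIC_SUBNET_ROUTES, "172.16.5.20"))   # vgw-0example (yum repo at the company)
print(lookup(BACKEND_SUBNET_ROUTES, "203.0.113.7"))  # None (no internet route)
```

The `/12` route to the VPN beats the `/0` route to the internet gateway for company addresses, which is why the same subnet can reach both the internet and the yum repo without the two routes conflicting.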

Once the company network is mapped to a VPC, the SDLC half of the story is the same as before.
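The SDLC only stays the same if the network lets the prod instances reach the yum repo over the VPN. On the VPC side, network ACLs decide that with numbered rules: they are evaluated from the lowest number up, the first match wins, anything unmatched is denied, and because ACLs are stateless, return traffic needs its own inbound rule. (Security groups, by contrast, are stateful and contain allow rules only.) The sketch below models TCP-only ACL rules; the rule numbers, CIDRs and repo address are hypothetical, with port 80 taken from the http:// repo URL.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class AclRule:
    """One network ACL entry (simplified: TCP only)."""
    number: int     # evaluated from the lowest number upward
    cidr: str       # destination range (outbound) or source range (inbound)
    port_from: int
    port_to: int
    action: str     # "allow" or "deny"


def evaluate(rules, address, port):
    """Return the action of the first matching rule, or "deny" if none matches."""
    ip = ipaddress.ip_address(address)
    for rule in sorted(rules, key=lambda r: r.number):
        if ip in ipaddress.ip_network(rule.cidr) and rule.port_from <= port <= rule.port_to:
            return rule.action
    return "deny"  # the catch-all "*" rule at the end of every network ACL


# Hypothetical ACL for the prod subnet; 172.16.0.0/12 stands for the company network.
OUTBOUND = [
    AclRule(100, "172.16.0.0/12", 80, 80, "allow"),   # HTTP to the yum repo over the VPN
    AclRule(200, "0.0.0.0/0", 0, 65535, "deny"),      # nothing else leaves the subnet
]
INBOUND = [
    # Stateless: the repo's replies arrive on ephemeral ports and need their own rule.
    AclRule(100, "172.16.0.0/12", 1024, 65535, "allow"),
]

print(evaluate(OUTBOUND, "172.16.5.20", 80))    # allow
print(evaluate(OUTBOUND, "203.0.113.7", 443))   # deny (rule 200)
print(evaluate(INBOUND, "172.16.5.20", 40000))  # allow
print(evaluate(INBOUND, "172.16.5.20", 22))     # deny (no rule matches)
```

Sorting by rule number inside `evaluate` mirrors the fact that the order in which rules were added does not matter; only their numbers do.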
