Cloud assets differ from physical assets in one important way: physical hosts are more or less permanent, while EC2 instances are temporary.

When we build an inventory of physical network assets, we want to know the following:

- what the current inventory snapshot is.

- for each host, what the hostname, IP address, device type, operating system, owner, etc. are.

- when a host is added to or removed from the inventory.

So we need to store a set of versioned inventory snapshots. The inventory version increases whenever some hosts change, and each version records the timestamp of that change.
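To make the idea concrete, here is a minimal sketch of a versioned snapshot store. The names `InventoryHistory`, `Version` and `record` are made up for illustration, and keying hosts by hostname is an assumption. A new version is stored only when the incoming snapshot differs from the current one.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Hypothetical host record, e.g. {"ip": "10.0.0.1", "os": "linux", "owner": "ops"}
Host = Dict[str, str]


@dataclass
class Version:
    number: int
    timestamp: float
    hosts: Dict[str, Host]  # keyed by hostname (an assumption of this sketch)
    added: List[str]
    removed: List[str]
    changed: List[str]


@dataclass
class InventoryHistory:
    versions: List[Version] = field(default_factory=list)

    def current(self) -> Dict[str, Host]:
        """Return the latest snapshot, or an empty inventory if none exists."""
        return self.versions[-1].hosts if self.versions else {}

    def record(self, hosts: Dict[str, Host], timestamp: float) -> Optional[Version]:
        """Store a new version only if something was added, removed or changed."""
        previous = self.current()
        added = sorted(hosts.keys() - previous.keys())
        removed = sorted(previous.keys() - hosts.keys())
        changed = sorted(
            name for name in hosts.keys() & previous.keys()
            if hosts[name] != previous[name]
        )
        if not (added or removed or changed):
            return None  # identical snapshot, keep the current version
        version = Version(
            len(self.versions) + 1, timestamp, dict(hosts), added, removed, changed
        )
        self.versions.append(version)
        return version
```

Because each version carries its own `added` and `removed` lists, the question of when a host entered or left the inventory can be answered by scanning the versions rather than diffing full snapshots again.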

If we apply the same strategy to an inventory of AWS assets, it most likely won't work. First of all, AWS assets change too often. Auto Scaling groups (ASGs) add and delete EC2 instances all the time, so you would need to store tons of snapshots to capture this change history. And even if you can keep up with the constantly changing cloud, why bother storing the hostname and IP address of an EC2 instance that only lived for one minute in an ASG? We should be more concerned with long-lived items such as Elastic Load Balancers, ASGs, tagged assets, etc. For those, we should store the hostname, IP address, device type, operating system, owner, group member count, availability zone, etc.

How do we know when an item we care about changes? We certainly don't want to track every change to every EC2 instance. One solution is to take snapshots periodically and store a new version only when the new snapshot differs from the current one. Another solution is to have CloudTrail inform us about these changes and then make a new snapshot. We can configure CloudTrail to send events to CloudWatch Logs; CloudTrail supports sending data, Insights, and management events there. An event such as "eventName": "CreateLoadBalancer" should trigger a new inventory snapshot. We can have the CloudWatch Logs consumed by a Lambda function, which then sends a message to SQS, and the listening application can take action accordingly.

A more sophisticated approach is to have a Java or Python application call the CloudWatch Logs API for the logs. The application then processes these logs to store information and create events. One of these events is that the assets we are concerned with have just changed, in which case our program should submit a job to the job queue to make a new inventory snapshot.
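The Lambda step could look roughly like the sketch below. CloudWatch Logs hands a subscribed Lambda function a base64-encoded, gzip-compressed JSON payload under `event["awslogs"]["data"]`, and each log event's `message` is one CloudTrail record. The `WATCHED_EVENTS` set, the message body format and the `QUEUE_URL` environment variable are assumptions chosen for illustration, not something prescribed by AWS.

```python
import base64
import gzip
import json
import os
from typing import Any, Callable, Dict, List, Optional

# Assumed list of CloudTrail event names that should trigger a new snapshot.
WATCHED_EVENTS = {
    "CreateLoadBalancer", "DeleteLoadBalancer",
    "CreateAutoScalingGroup", "DeleteAutoScalingGroup",
    "CreateTags", "DeleteTags",
}


def decode_subscription(event: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Unpack the CloudWatch Logs payload into a list of CloudTrail records."""
    payload = gzip.decompress(base64.b64decode(event["awslogs"]["data"]))
    return [json.loads(e["message"]) for e in json.loads(payload)["logEvents"]]


def snapshot_requests(event: Dict[str, Any]) -> List[str]:
    """Build one message body per record whose eventName we care about."""
    return [
        json.dumps({"action": "snapshot", "reason": record["eventName"]})
        for record in decode_subscription(event)
        if record.get("eventName") in WATCHED_EVENTS
    ]


def handler(event: Dict[str, Any], context: Any,
            send: Optional[Callable[[str], Any]] = None) -> int:
    """Lambda entry point; `send` is injectable so the logic runs without AWS."""
    if send is None:
        import boto3  # imported lazily, only needed when running in AWS
        sqs = boto3.client("sqs")
        queue_url = os.environ["QUEUE_URL"]  # hypothetical variable name

        def send(body: str) -> Any:
            return sqs.send_message(QueueUrl=queue_url, MessageBody=body)

    bodies = snapshot_requests(event)
    for body in bodies:
        send(body)
    return len(bodies)
```

Short-lived instance churn such as `RunInstances` is deliberately left out of the watched set, so an ASG scaling up and down does not flood the queue with snapshot requests.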

The inventory information can be retrieved by describing the ELBs, ASGs and EC2 instances, using tags as the filter. A worker thread can then pick up the inventory snapshot job, create a new snapshot file and ETL it to the blob store.
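As a rough sketch of the retrieval step, the functions below take boto3-style clients as arguments and return only the fields we care about. The function names and the output field names are invented for this example. It uses the `elbv2` API, so Classic Load Balancers would need the separate `elb` client, and ASG and load balancer tags are filtered client-side here to keep the example simple.

```python
from typing import Any, Dict, List


def _tags(pairs: List[Dict[str, str]]) -> Dict[str, str]:
    """Flatten AWS-style [{'Key': k, 'Value': v}, ...] into a plain dict."""
    return {p["Key"]: p.get("Value", "") for p in pairs}


def tagged_instances(ec2: Any, key: str, value: str) -> List[Dict[str, Any]]:
    """EC2 instances carrying the tag; the filter is applied server-side."""
    pages = ec2.get_paginator("describe_instances").paginate(
        Filters=[{"Name": f"tag:{key}", "Values": [value]}]
    )
    return [
        {
            "id": inst["InstanceId"],
            "ip": inst.get("PrivateIpAddress"),
            "az": inst["Placement"]["AvailabilityZone"],
        }
        for page in pages
        for reservation in page["Reservations"]
        for inst in reservation["Instances"]
    ]


def tagged_asgs(autoscaling: Any, key: str, value: str) -> List[Dict[str, Any]]:
    """Auto Scaling groups carrying the tag, with their member count."""
    pages = autoscaling.get_paginator("describe_auto_scaling_groups").paginate()
    return [
        {
            "name": group["AutoScalingGroupName"],
            "member_count": len(group["Instances"]),
            "azs": group["AvailabilityZones"],
        }
        for page in pages
        for group in page["AutoScalingGroups"]
        if _tags(group.get("Tags", [])).get(key) == value
    ]


def tagged_load_balancers(elbv2: Any, key: str, value: str) -> List[Dict[str, Any]]:
    """Load balancers carrying the tag; tags need a separate describe_tags call."""
    pages = elbv2.get_paginator("describe_load_balancers").paginate()
    by_arn = {lb["LoadBalancerArn"]: lb for page in pages for lb in page["LoadBalancers"]}
    arns = list(by_arn)
    matched = []
    for start in range(0, len(arns), 20):  # describe_tags accepts at most 20 ARNs
        response = elbv2.describe_tags(ResourceArns=arns[start:start + 20])
        for desc in response["TagDescriptions"]:
            if _tags(desc["Tags"]).get(key) == value:
                lb = by_arn[desc["ResourceArn"]]
                matched.append({"dns_name": lb.get("DNSName"), "type": lb.get("Type")})
    return matched


def build_snapshot(ec2: Any, autoscaling: Any, elbv2: Any,
                   key: str, value: str) -> Dict[str, List[Dict[str, Any]]]:
    """Combine the three describe results into one snapshot document."""
    return {
        "load_balancers": tagged_load_balancers(elbv2, key, value),
        "auto_scaling_groups": tagged_asgs(autoscaling, key, value),
        "instances": tagged_instances(ec2, key, value),
    }
```

With real credentials, the clients would come from `boto3.client("ec2")`, `boto3.client("autoscaling")` and `boto3.client("elbv2")`, and the tag key and value would be whatever your team uses to mark the assets it cares about.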

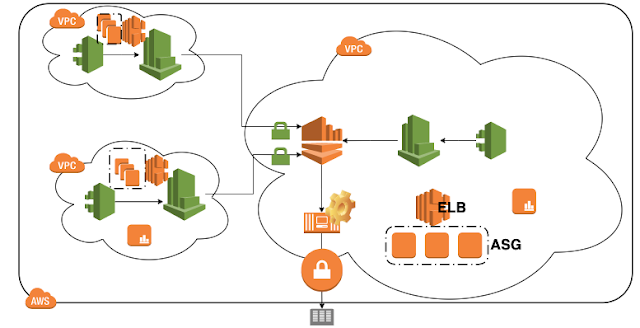

This is a basic setup. If you have multiple AWS accounts across multiple regions, you need to iterate through the ARNs and regions to check the CloudWatch logs or build the inventories, then merge them into one snapshot. You can also configure the CloudWatch logs in the different AWS accounts to be consumed by a Kinesis stream in one account. At the time of writing, Kinesis streams are the only resource supported as a destination for cross-account subscriptions. The Kinesis shards can then be read in parallel to fetch the logs. Setting up CloudTrail and CloudWatch in a customer's AWS account doesn't have to be manual. We can have the program assume a cross-account role and then call the CloudFormation API to create the trail and the CloudWatch log group, if they are not found there, and subscribe the log group to the CloudWatch Logs destination for the Kinesis stream in the primary account.
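The account and region loop might be sketched as follows, assuming the ARNs are IAM role ARNs that our program is allowed to assume. `merged_inventory`, `session_factory` and `collect` are hypothetical names; in practice `session_factory` would be `boto3.Session` and `collect` would be whatever function builds the per-region inventory from a session.

```python
from typing import Any, Callable, Dict, Iterable, List


def merged_inventory(
    sts: Any,
    role_arns: Iterable[str],
    regions: Iterable[str],
    session_factory: Callable[..., Any],
    collect: Callable[[Any], List[Dict[str, Any]]],
) -> List[Dict[str, Any]]:
    """Assume each role, visit each region, and merge the results into one list."""
    regions = list(regions)
    merged: List[Dict[str, Any]] = []
    for role_arn in role_arns:
        # Temporary credentials for the target account.
        creds = sts.assume_role(
            RoleArn=role_arn,
            RoleSessionName="inventory",  # arbitrary session name for this sketch
        )["Credentials"]
        # arn:aws:iam::<account-id>:role/<name> -> the fifth field is the account id
        account_id = role_arn.split(":")[4]
        for region in regions:
            session = session_factory(
                aws_access_key_id=creds["AccessKeyId"],
                aws_secret_access_key=creds["SecretAccessKey"],
                aws_session_token=creds["SessionToken"],
                region_name=region,
            )
            for item in collect(session):
                # Label every item so the merged snapshot stays unambiguous.
                merged.append({**item, "account": account_id, "region": region})
    return merged
```

Tagging each item with its account and region is a design choice of this sketch: names such as ASG names are only unique within one account and region, so the merged snapshot needs the extra fields to tell items apart.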
